Abstract

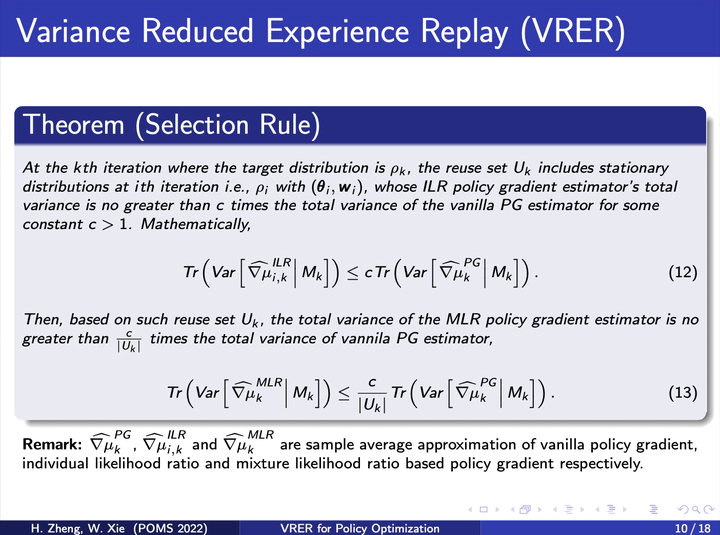

We extend the idea underlying the success of green simulation assisted policy gradient (GS-PG) to partial historical trajectory reuse for infinite-horizon Markov Decision Processes (MDPs). The existing GS-PG method was designed to learn from complete episodes, which limits its applicability to low-data environments and online process control. In this paper, we present a mixture likelihood ratio (MLR) based policy gradient estimator that can leverage information from historical state transitions generated under different behavioral policies. We propose a variance reduced experience replay (VRER) method that selectively reuses the most relevant transition observations, which improves the accuracy of policy gradient estimation and accelerates the learning of optimal and robust policies. We then develop an algorithm that incorporates VRER into state-of-the-art step-based policy optimization algorithms such as actor-critic methods and proximal policy optimization (PPO). The empirical study demonstrates that the proposed policy gradient methodology can significantly outperform existing policy optimization approaches.
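To make the MLR idea concrete, the following is a minimal sketch, not the paper's estimator, under several stated assumptions: a tabular softmax policy, a uniform mixture over K stored behavioral policies, advantage estimates that are already available for each reused transition, and a weight built only from action probabilities, that is, pi_theta(a|s) / ((1/K) * sum_i pi_{theta_i}(a|s)), which ignores any mismatch between the behavioral policies' state distributions. The function names (`softmax_probs`, `mlr_weight`, `grad_log_softmax`, `mlr_policy_gradient`) are hypothetical, and the VRER selection rule is not modeled.

```python
import math
from typing import List, Sequence, Tuple

Theta = List[List[float]]  # theta[s][a]: logit of action a in state s (assumed tabular policy)


def softmax_probs(theta: Theta, s: int) -> List[float]:
    """Action probabilities of a tabular softmax policy in state s (numerically stabilized)."""
    logits = theta[s]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mlr_weight(theta: Theta, behaviors: Sequence[Theta], s: int, a: int) -> float:
    """Assumed mixture likelihood ratio: target probability over the uniform
    mixture of behavioral-policy probabilities for the same (s, a) pair."""
    target = softmax_probs(theta, s)[a]
    mixture = sum(softmax_probs(b, s)[a] for b in behaviors) / len(behaviors)
    return target / mixture


def grad_log_softmax(theta: Theta, s: int, a: int) -> Theta:
    """Gradient of log pi_theta(a|s) with respect to theta (only row s is nonzero)."""
    probs = softmax_probs(theta, s)
    grad = [[0.0] * len(row) for row in theta]
    for j, p in enumerate(probs):
        grad[s][j] = (1.0 if j == a else 0.0) - p
    return grad


def mlr_policy_gradient(
    theta: Theta,
    behaviors: Sequence[Theta],
    transitions: Sequence[Tuple[int, int, float]],
) -> Theta:
    """Average of w(s, a) * A(s, a) * grad log pi_theta(a|s) over pooled transitions.

    transitions: (state, action, advantage) triples pooled from all behavioral
    policies; the advantage values are assumed to be given."""
    grad = [[0.0] * len(row) for row in theta]
    for s, a, adv in transitions:
        w = mlr_weight(theta, behaviors, s, a)
        g = grad_log_softmax(theta, s, a)
        for i, row in enumerate(g):
            for j, gij in enumerate(row):
                grad[i][j] += w * adv * gij
    n = len(transitions)
    return [[x / n for x in row] for row in grad]
```

When every behavioral policy equals the current policy, each weight is 1 and the sketch reduces to the ordinary on-policy likelihood ratio estimator; transitions whose actions were more likely under older policies than under pi_theta are down-weighted, and the reverse are up-weighted.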